No audio/video? ... Just implement the damn plugin!

November 10, 2025

Having no experience with Rust at the time, I had the idea to write a music player app that could also download music. Several frameworks exist for this, and I chose Tauri, which is roughly the equivalent of Electron but written in Rust.

Development was going pretty well until I wanted to play an audio file. The simplest approach is to play the file from the frontend, since the web engine already knows how to play media. Tauri defines a custom protocol (asset://) that makes local file playback easier, which lets us do:

```js
import { appDataDir, join } from "@tauri-apps/api/path";
import { convertFileSrc } from "@tauri-apps/api/core";

const appDataDirPath = await appDataDir();
const filePath = await join(appDataDirPath, "assets/video.mp4");
// Turns the local path into a URL served by Tauri's asset protocol
const assetUrl = convertFileSrc(filePath);

const video = document.getElementById("my-video");
const source = document.createElement("source");
source.type = "video/mp4";
source.src = assetUrl;
video.appendChild(source);
video.load();
```

Example from the Tauri documentation
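`convertFileSrc` does not read the file. It only builds a URL. As far as I can tell from Tauri v2's source, on Linux and macOS that URL is `asset://localhost/` followed by `encodeURIComponent(path)`. Windows and Android use `http://asset.localhost/` instead.

The sketch below reproduces the same string transformation in C, assuming that behavior. `asset_url_from_path` is a hypothetical helper, not a Tauri API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Characters that JavaScript's encodeURIComponent leaves as-is. */
static int is_uri_component_safe(unsigned char c)
{
    return (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z') ||
           (c >= '0' && c <= '9') ||
           (c != '\0' && strchr("-_.!~*'()", c) != NULL);
}

/* Hypothetical helper: builds "asset://localhost/" + encodeURIComponent(path),
 * percent-encoding every other byte as %XX (uppercase hex, UTF-8 bytes as-is).
 * Returns 0 on success, -1 if `out` is too small. */
static int asset_url_from_path(const char *path, char *out, size_t outlen)
{
    static const char hex[] = "0123456789ABCDEF";
    static const char prefix[] = "asset://localhost/";
    size_t n = sizeof prefix - 1;

    assert(path != NULL && out != NULL);
    if (outlen <= n)
        return -1;
    memcpy(out, prefix, n);
    for (const unsigned char *p = (const unsigned char *)path; *p; p++) {
        if (is_uri_component_safe(*p)) {
            if (n + 1 >= outlen)
                return -1;
            out[n++] = (char)*p;
        } else {
            if (n + 3 >= outlen)
                return -1;
            out[n++] = '%';
            out[n++] = hex[*p >> 4];
            out[n++] = hex[*p & 0x0F];
        }
    }
    out[n] = '\0';
    return 0;
}
```

For `/home/user/video.mp4` this yields `asset://localhost/%2Fhome%2Fuser%2Fvideo.mp4`. That is the kind of URI that eventually reaches the media backend.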

But something odd happens: the file doesn't play, and an error shows up:

```text
Unhandled Promise Rejection: NotSupportedError: The operation is not supported
```

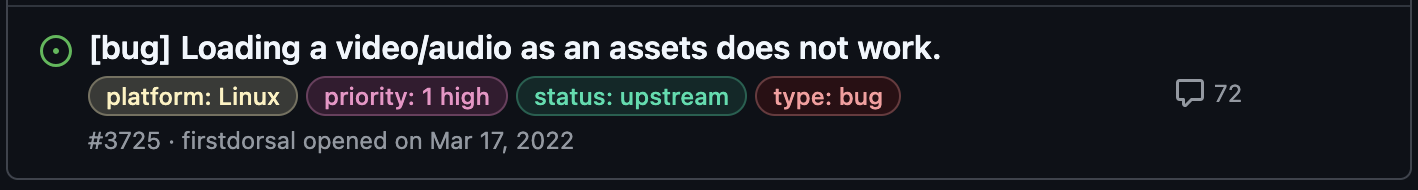

Playing an audio or video file is a fairly common need for an application, so I went looking for a GitHub issue and...

oh boy...

The problem had apparently existed for 3 years, and lots of people had run into it, so we were dealing with a big one.

When a problem like this shows up, people usually wait for that one person who will ship a patch, or for someone to propose a workaround. For audio files, you can play them from the backend with a library like rodio (which is what I did in my app). Video is trickier, because you would have to send every frame from the backend to the frontend efficiently...
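A quick back-of-the-envelope calculation shows why. Assume the backend decodes to uncompressed RGBA (4 bytes per pixel) and pushes every frame to the webview. The required throughput is then width × height × 4 × fps. `raw_video_bytes_per_second` is a hypothetical helper for that formula.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes per second needed to ship uncompressed frames from the backend
 * to the frontend, assuming a fixed number of bytes per pixel. */
static uint64_t raw_video_bytes_per_second(uint32_t width, uint32_t height,
                                           uint32_t bytes_per_pixel,
                                           uint32_t fps)
{
    /* Widen first so 4K at high frame rates cannot overflow 32 bits */
    return (uint64_t)width * height * bytes_per_pixel * fps;
}
```

For 1080p at 30 fps that is 248,832,000 bytes per second (about 249 MB/s). 4K at 60 fps is roughly 2 GB/s, before any IPC serialization overhead.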

One possible workaround is to read the raw file and create a Blob (Binary Large Object):

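```tsx
import { useState } from "react"
import { readBinaryFile } from "@tauri-apps/api/fs" // Tauri v1 fs API
// `Button` comes from the app's UI component library

export function AudioPlayer() {
  const [urlAudio, setUrlAudio] = useState<string>("")

  return (
    <>
      <Button
        onClick={() => {
          const filePath = "imafile.mp3" // or *.mp4
          // Loads the WHOLE file into memory as a Uint8Array
          void readBinaryFile(filePath)
            .then((res) => {
              const fileBlob = new Blob([res], { type: "audio/mpeg" })
              // Release the previous object URL before replacing it
              if (urlAudio) URL.revokeObjectURL(urlAudio)
              setUrlAudio(URL.createObjectURL(fileBlob))
            })
            // .catch() after .then() so a failed read never reaches the Blob code
            .catch((err) => {
              console.error(err)
            })
        }}
      >
        Im a button
      </Button>
      {/* src on <audio> itself, so the element reloads when the URL changes */}
      <audio controls src={urlAudio || undefined} />
    </>
  )
}
```

But this approach hits its limits with large videos (> 1 GB). The whole file has to be read into memory and turned into a Blob before playback can even start.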

Another workaround, which I find really wild, is to serve the files through a local HTTP server.
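Part of why a local server works is that GStreamer already ships a source element for http:// URIs (souphttpsrc). To stream a multi-gigabyte file instead of loading it whole, such a server has to honor HTTP Range requests. Media elements use these to fetch and seek through a file piece by piece.

Below is a minimal sketch of parsing a single-range header. It assumes only the `bytes=` unit and rejects multi-range requests. `parse_byte_range` is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Parses "bytes=0-1023", "bytes=500-" or "bytes=-500" against a file of
 * `size` bytes. On success stores the inclusive [start, end] range and
 * returns 0; returns -1 if malformed, unsatisfiable or multi-range. */
static int parse_byte_range(const char *value, uint64_t size,
                            uint64_t *start, uint64_t *end)
{
    const char *p;
    char *next;

    assert(value != NULL && start != NULL && end != NULL);
    if (strncmp(value, "bytes=", 6) != 0 || size == 0)
        return -1;
    p = value + 6;
    if (strchr(p, ',') != NULL)
        return -1;                      /* multi-range: not supported here */
    if (*p == '-') {                    /* suffix range: the last N bytes */
        uint64_t n;
        if (p[1] < '0' || p[1] > '9')
            return -1;
        n = strtoull(p + 1, &next, 10);
        if (*next != '\0' || n == 0)
            return -1;
        *start = n >= size ? 0 : size - n;
        *end = size - 1;
        return 0;
    }
    if (*p < '0' || *p > '9')
        return -1;
    *start = strtoull(p, &next, 10);
    if (*next != '-')
        return -1;
    p = next + 1;
    if (*p == '\0') {
        *end = size - 1;                /* open-ended: "bytes=500-" */
    } else {
        if (*p < '0' || *p > '9')
            return -1;
        *end = strtoull(p, &next, 10);
        if (*next != '\0')
            return -1;
        if (*end >= size)
            *end = size - 1;            /* clamp to the last byte */
    }
    if (*start > *end || *start >= size)
        return -1;
    return 0;
}
```

For a 1000-byte file, `bytes=-100` resolves to [900, 999]. A server would then answer `206 Partial Content` with `Content-Range: bytes 900-999/1000`.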

None of these tricks satisfied me, so I dug deeper.

WebKitGTK

WebKitGTK is the WebKit port for GTK on Linux.

Under the hood, Tauri relies on a different embedded web engine depending on the OS, including WebKitGTK on Linux. WebKitGTK has a Bugzilla, and after some searching I came across a report from 2015 (10 years ago!!!) describing the same issue. Someone had even written a minimal example:

```c
#include <gtk/gtk.h>
#include <webkit2/webkit2.h>

static void uri_scheme_request_cb(WebKitURISchemeRequest *request,
                                  gpointer user_data)
{
    [...]
    stream = g_file_read(file, NULL, &err);
    if (err == NULL)
    {
        GFileInfo *file_info = g_file_query_info(file, "standard::*",
                                                 G_FILE_QUERY_INFO_NONE,
                                                 NULL, &err);
        if (file_info != NULL)
            stream_length = g_file_info_get_size(file_info);
        else
        {
            /* g_error() is fatal: it aborts, so the two lines below never run */
            g_error("Could not get file info: %s\n", err->message);
            g_error_free(err);
            return;
        }

        /* Hand WebKit the stream, its length and its content type */
        webkit_uri_scheme_request_finish(request, G_INPUT_STREAM(stream),
                                         stream_length,
                                         g_file_info_get_content_type(file_info));
        g_object_unref(stream);
        g_object_unref(file_info);
    }
    else
    {
        webkit_uri_scheme_request_finish_error(request, err);
        g_error_free(err);
    }
}

int main(int argc, char *argv[])
{
    WebKitWebContext *ctx;

    ctx = webkit_web_context_new();
    /* Every custom:// request is routed to uri_scheme_request_cb */
    webkit_web_context_register_uri_scheme(
        ctx, "custom",
        (WebKitURISchemeRequestCallback)uri_scheme_request_cb,
        NULL, NULL);
    [...]
    return 0;
}
```

This code registers a handler that is called whenever a custom:// URI is requested. The handler opens the file and passes the resulting stream, its length, and its content type to WebKit through the webkit_uri_scheme_request_finish function.
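Besides the stream, the handler hands WebKit two pieces of metadata: the length in bytes and a content type. The sketch below shows that part in plain POSIX C, without GIO. `describe_media_file` and `guess_media_type` are hypothetical helpers. The extension table is a naive stand-in for `g_file_info_get_content_type()`.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <string.h>
#include <strings.h>
#include <sys/stat.h>

/* Naive extension -> MIME lookup, standing in for GIO's content type. */
static const char *guess_media_type(const char *path)
{
    static const struct { const char *ext, *mime; } table[] = {
        { ".mp4", "video/mp4" },  { ".webm", "video/webm" },
        { ".mp3", "audio/mpeg" }, { ".ogg", "audio/ogg" },
    };
    const char *dot = strrchr(path, '.');

    if (dot != NULL)
        for (size_t i = 0; i < sizeof table / sizeof table[0]; i++)
            if (strcasecmp(dot, table[i].ext) == 0)
                return table[i].mime;
    return "application/octet-stream";
}

/* Collects what webkit_uri_scheme_request_finish() needs besides the
 * stream: its length in bytes and a content type. -1 if stat() fails. */
static int describe_media_file(const char *path, long long *length,
                               const char **content_type)
{
    struct stat st;

    assert(path != NULL && length != NULL && content_type != NULL);
    if (stat(path, &st) != 0)
        return -1;
    *length = (long long)st.st_size;
    *content_type = guess_media_type(path);
    return 0;
}
```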

With that in hand, I dove into a long and terrifying debugging session in a massive codebase full of asynchronous code...

If you want to build WebKit yourself, be aware that you need at least 2 GB of RAM per CPU core when building in parallel (make -j ...).
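That rule of thumb translates directly into a `-j` value: the job count is capped both by the number of cores and by RAM divided by 2 GB. Below is a small C sketch. It assumes 2 GiB per job and uses Linux/glibc's `sysconf` names for physical memory. Both function names are hypothetical.

```c
#include <assert.h>
#include <unistd.h>

/* Jobs that fit in RAM at ~2 GiB each, capped by the core count, at least 1. */
static long webkit_build_jobs(long cores, long ram_mib)
{
    const long mib_per_job = 2048;   /* "2 GB per core" rule of thumb */
    long by_ram = ram_mib / mib_per_job;
    long jobs = by_ram < cores ? by_ram : cores;

    assert(cores > 0 && ram_mib >= 0);
    return jobs < 1 ? 1 : jobs;
}

/* Same computation with this machine's figures (_SC_PHYS_PAGES and
 * _SC_NPROCESSORS_ONLN are common extensions, available on Linux/glibc). */
static long webkit_build_jobs_here(void)
{
    long cores = sysconf(_SC_NPROCESSORS_ONLN);
    long pages = sysconf(_SC_PHYS_PAGES);
    long page_size = sysconf(_SC_PAGE_SIZE);

    if (cores < 1 || pages < 1 || page_size < 1)
        return 1;
    return webkit_build_jobs(cores,
                             (long)((long long)pages * page_size / (1024 * 1024)));
}
```

On a 16-core machine with 16 GiB of RAM this gives 8, so `make -j8` rather than `-j16`.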

One of my problems was that my breakpoints were never hit. This is because WebKitGTK runs web content in separate processes, so the debugger wasn't attached to the right PID. I had to find the right one in the various debug logs. I don't know why, but in my case gdb was unstable with WebKit (memory leaks, crashes), so I switched to lldb, which worked much better.

After these struggles, I finally understood that WebKitGTK uses GStreamer for media playback, and that's where the problem came from.

For those unfamiliar with GStreamer, it's a library for processing multimedia streams as pipelines of elements. It is developed as a freedesktop.org project and used widely on the GNOME desktop.

Having never used GStreamer, I felt a bit lost in the complexity of the library and didn't know which direction to take. So I innocently opened an issue on the GStreamer repo, which was immediately closed.

I didn't have time to look into it further, so I put it aside and went on with my life.

Fast forward to 2025

I had finished my studies and had a bit of downtime, so I figured it was the right moment to resume the investigation, knowing the problem still existed.

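Before continuing, let's recap. Tauri serves local files through its custom asset:// protocol, and WebKitGTK delegates media playback to GStreamer. So when a media element requests a file through asset://, for example asset://path/to/file.mp4, the URI is passed to GStreamer. GStreamer doesn't know how to handle it, because no handler exists for that scheme.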
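Here is a toy model of that lookup: extract the scheme from the URI, then look for a source element that claims it. This is not GStreamer's actual registry code, which queries every element factory implementing the GstURIHandler interface. The table and the helper names `uri_scheme` and `source_for_scheme` are hypothetical, though filesrc and souphttpsrc are real elements.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Copies the lowercased scheme of `uri` ("asset" for
 * "asset://path/to/file.mp4") into `out`. Returns 0 on success, -1 if
 * there is no valid scheme. RFC 3986 scheme syntax:
 * ALPHA *( ALPHA / DIGIT / "+" / "-" / "." ) followed by ':'. */
static int uri_scheme(const char *uri, char *out, size_t outlen)
{
    size_t i = 0;

    assert(uri != NULL && out != NULL);
    if (!isalpha((unsigned char)uri[0]))
        return -1;
    while (isalnum((unsigned char)uri[i]) || uri[i] == '+' ||
           uri[i] == '-' || uri[i] == '.')
        i++;
    if (uri[i] != ':' || i + 1 > outlen)
        return -1;
    for (size_t j = 0; j < i; j++)
        out[j] = (char)tolower((unsigned char)uri[j]);
    out[i] = '\0';
    return 0;
}

/* Toy registry: which source element claims which scheme. */
static const char *source_for_scheme(const char *scheme)
{
    static const struct { const char *scheme, *element; } known[] = {
        { "file", "filesrc" },
        { "http", "souphttpsrc" },
        { "https", "souphttpsrc" },
    };
    for (size_t i = 0; i < sizeof known / sizeof known[0]; i++)
        if (strcmp(known[i].scheme, scheme) == 0)
            return known[i].element;
    return NULL; /* nobody handles "asset": the missing-plugin case */
}
```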

In the ticket I mentioned earlier, someone pointed me to a GStreamer unit test that registers a URI handler. At first I didn't understand much, but after digging through the code I found a snippet like this:

```c
static gboolean
gst_red_video_src_uri_set_uri (GstURIHandler * handler,
                               const gchar * uri,
                               GError ** error)
{
  ...
}

static void
gst_red_video_src_uri_handler_init (gpointer g_iface,
                                    gpointer iface_data)
{
  ...
}
```

And that's exactly what I needed to create a handler for my asset:// scheme!

It took me a while to realize that, in my case, I had to write a GStreamer plugin. The hint had been there all along: when I managed to debug WebKit, I had eventually hit the error code GST_CORE_ERROR_MISSING_PLUGIN. Remembering that is what made it click.

How to write a GStreamer plugin (non-exhaustive)

GStreamer has documentation specifically about this (the Plugin Writer's Guide), and the unit test I found was a good complement for what I wanted to do.

The core of the plugin is a set_uri function that extracts the file path from the URI, opens the file, and feeds it into a pipeline that processes the stream to output video/audio.
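The first job of set_uri is turning the URI back into a file path. Below is a sketch in plain C that assumes two URI shapes:

- the percent-encoded `asset://localhost/...` form that `convertFileSrc` appears to produce on Linux;
- the `asset:///home/user/...` form used with gst-launch-1.0 in this post.

`asset_uri_to_path` is a hypothetical helper. In a real plugin, GLib's `g_uri_unescape_string()` would handle the decoding.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

static int hex_value(char c)
{
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

/* Hypothetical core of set_uri: maps both
 *   asset://localhost/%2Fhome%2Fuser%2Fvideo.mp4  (percent-encoded path)
 *   asset:///home/user/video.mp4                   (plain absolute path)
 * to "/home/user/video.mp4". Returns 0 on success, -1 on error. */
static int asset_uri_to_path(const char *uri, char *out, size_t outlen)
{
    static const char prefix[] = "asset://";
    const char *p;
    size_t n = 0;

    assert(uri != NULL && out != NULL && outlen > 1);
    if (strncmp(uri, prefix, sizeof prefix - 1) != 0)
        return -1;
    p = strchr(uri + sizeof prefix - 1, '/'); /* skip the host, if any */
    if (p == NULL)
        return -1;
    out[n++] = '/';                           /* the result is absolute */
    for (p++; *p != '\0'; p++) {
        char c = *p;
        if (c == '%') {
            int hi = hex_value(p[1]);
            int lo = hi < 0 ? -1 : hex_value(p[2]);
            if (lo < 0)
                return -1;
            c = (char)(hi * 16 + lo);
            p += 2;
            if (c == '\0')
                return -1;                    /* refuse embedded NULs */
        }
        if (n == 1 && c == '/')
            continue;                         /* avoid a leading "//" */
        if (n + 1 >= outlen)
            return -1;
        out[n++] = c;
    }
    out[n] = '\0';
    return 0;
}
```

Rejecting `%00` matters because an embedded NUL would silently truncate the path later handed to filesrc's `location` property.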

The goal is to have a plugin that makes the following command work:

```sh
gst-launch-1.0 uridecodebin uri=asset:///home/user/video.mp4 ! autovideosink
```

uridecodebin is a GStreamer element that picks a source element matching the URI's scheme, then automatically builds a decoding pipeline based on the media type it detects.

autovideosink is a GStreamer element that automatically selects a suitable video sink and displays the video in a window.

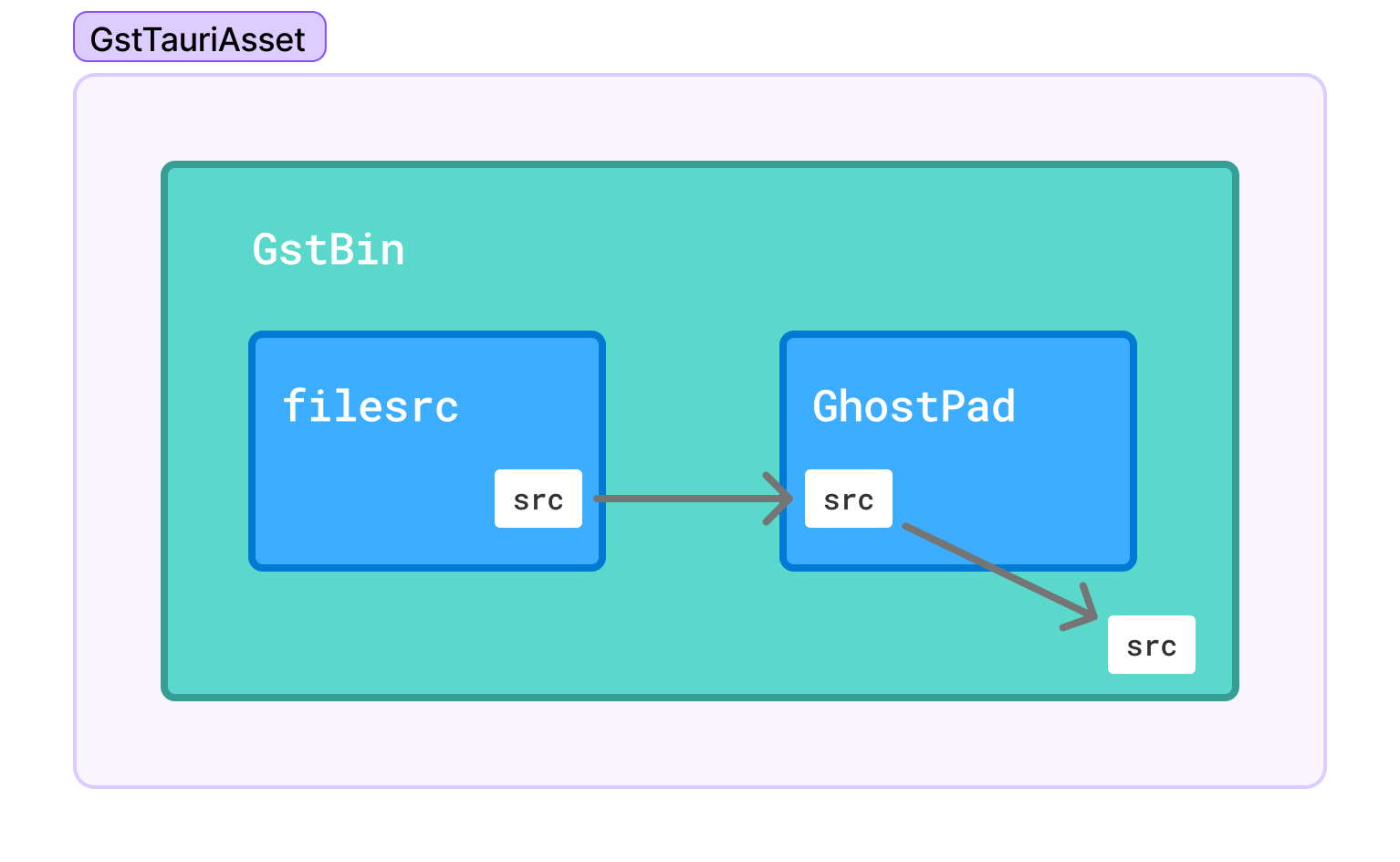

To achieve this, the plugin's internal pipeline is as follows:

The first element, filesrc, opens the file. Its source pad is then exposed on the plugin element through a GhostPad. All of this is done in the set_uri function.

Feel free to share your ideas if you think mine is missing something!

After writing and compiling the plugin, GStreamer needs to be able to find it. The easiest way is to set the GST_PLUGIN_PATH environment variable to the folder containing the compiled plugin, and with that, we have:

Terry Davis contacting the CIA with my awesome plugin

Victory!!!
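Exporting GST_PLUGIN_PATH in a shell is fine for testing, but an application can also set it for itself. The sketch below assumes it runs before GStreamer is initialized in whichever process loads the plugins. Child processes typically inherit the environment, so setting it early in the app's main process is one option. `prepend_gst_plugin_path` is a hypothetical helper. On Linux the variable holds a ':'-separated list of directories, so the sketch prepends rather than overwrites.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: prepends `dir` to GST_PLUGIN_PATH, keeping any
 * existing entries. Must run before gst_init() in the process that loads
 * plugins. Returns 0 on success, -1 on failure. */
static int prepend_gst_plugin_path(const char *dir)
{
    const char *old = getenv("GST_PLUGIN_PATH");
    char *value;
    size_t len;
    int rc;

    assert(dir != NULL && *dir != '\0');
    if (old == NULL || *old == '\0')
        return setenv("GST_PLUGIN_PATH", dir, 1);

    len = strlen(dir) + 1 + strlen(old) + 1;  /* "dir:old" + NUL */
    value = malloc(len);
    if (value == NULL)
        return -1;
    snprintf(value, len, "%s:%s", dir, old);  /* copy before setenv */
    rc = setenv("GST_PLUGIN_PATH", value, 1);  /* setenv copies the string */
    free(value);
    return rc;
}
```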

Contributing to Tauri

Now that I had something working, I could finally submit my Pull Request to Tauri. I had written the plugin in C during my tests, but since Tauri is written in Rust, I had to rewrite it in Rust using the gstreamer-rs bindings. The rewrite was a much nicer experience than C, thanks to Rust features (macros, traits, strong typing), whereas in C almost everything is done with macros.

The Pull Request is available here. After a discussion with one of the maintainers on Discord, it should be accepted soon.

Thanks for reading this far!

Afterthought

After writing the plugin, I wondered whether the problem should have been fixed on the WebKit side instead. On one hand, it feels natural to write a GStreamer plugin for this specific case, where Tauri (or another library) wants custom behavior for its own protocol. On the other hand, I find it unnatural to both register your handler through the WebKit API AND write a GStreamer plugin so that media works with that same handler.

I haven't mentioned it so far, but the issue doesn't exist on macOS/iOS or Windows. On Windows, that's explained by the use of WebView2, which is based on Chromium rather than WebKit. On macOS/iOS, however, WebKit is used as well, with AVFoundation rather than GStreamer for media playback. That suggests a fix could likely be found on the WebKitGTK side by studying how WebKit behaves there. It was on my mind during my research, but I didn't dig further into that path. I'll come back to it when I have more time and will probably write another blog post on the topic.